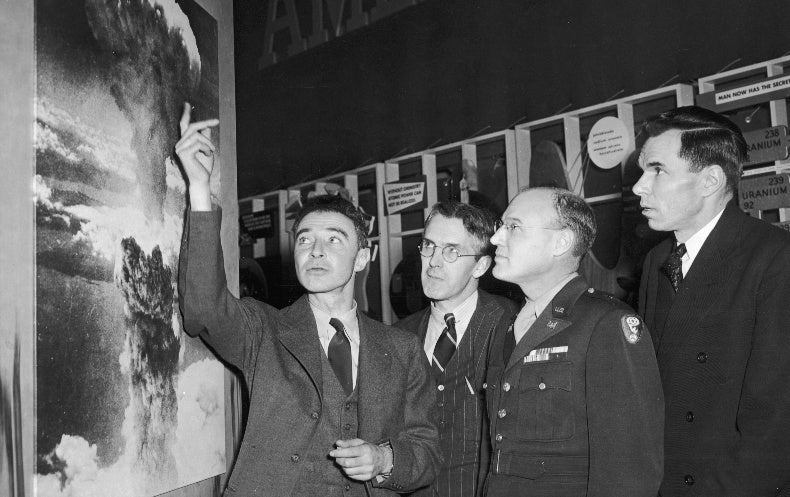

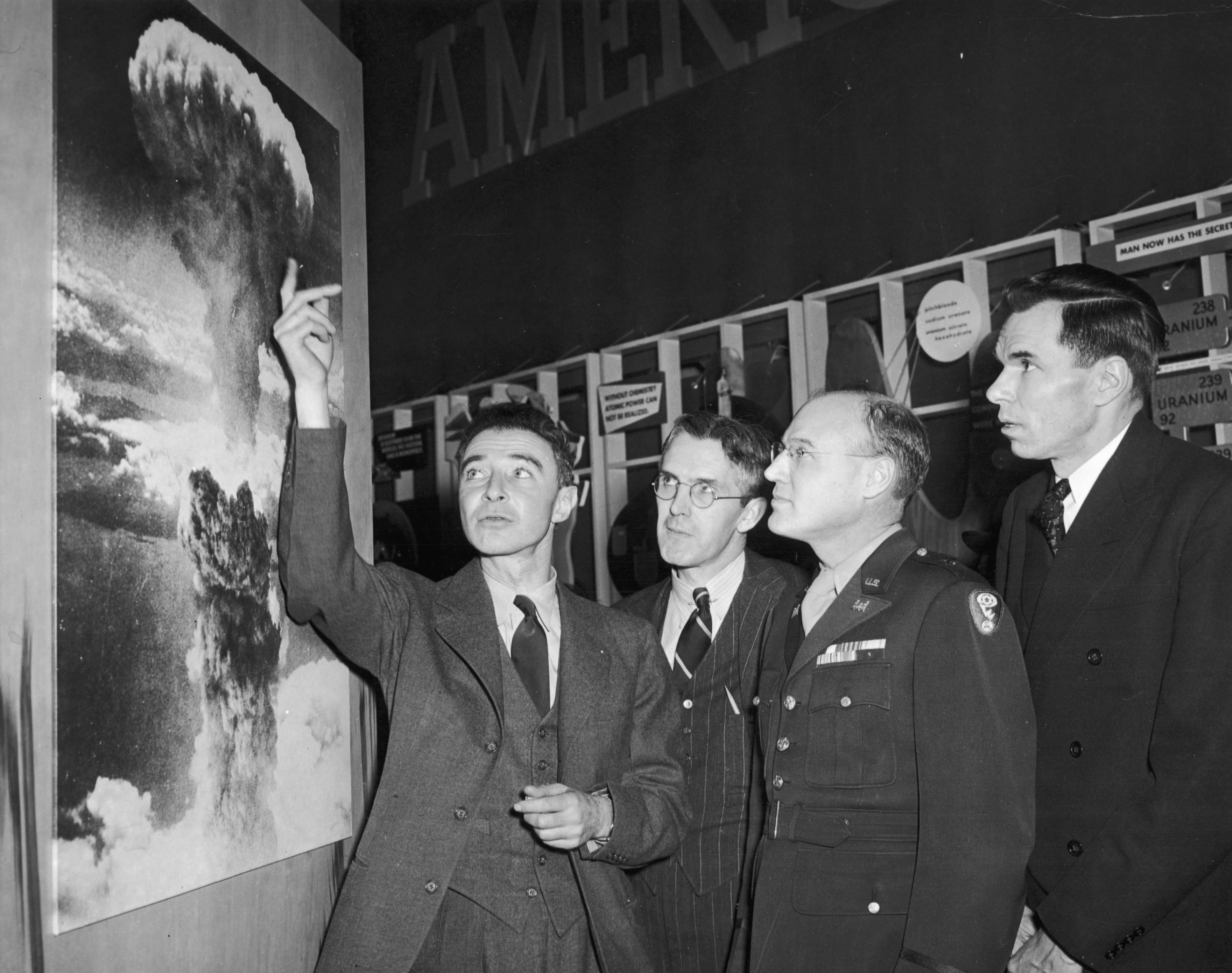

Eighty-one years ago, President Franklin D. Roosevelt tasked the young physicist J. Robert Oppenheimer with setting up a secret laboratory in Los Alamos, N.M. Along with his colleagues, Oppenheimer was charged with developing the world’s first nuclear weapons under the code name the Manhattan Project. Less than three years later, they succeeded. In 1945 the U.S. dropped these weapons on the residents of the Japanese cities of Hiroshima and Nagasaki, killing hundreds of thousands of people.

Oppenheimer became known as “the father of the atomic bomb.” Despite his pride in his wartime service and technological achievement, he also became vocal about the need to contain this dangerous technology.

But the U.S. didn’t heed his warnings, and geopolitical fear won the day instead. The country raced to deploy ever more powerful nuclear systems with scant recognition of the immense and disproportionate harm these weapons would cause. Officials also ignored Oppenheimer’s calls for greater international collaboration to regulate nuclear technology.

Oppenheimer’s example holds lessons for us today, too. We must not make the same mistake with artificial intelligence that we made with nuclear weapons.

We are still in the early stages of a promised artificial intelligence revolution. Tech companies are racing to develop and deploy AI-driven large language models, such as ChatGPT. Regulators need to keep up.

Though AI promises enormous benefits, it has already exposed its potential for harm and abuse. Earlier this year the U.S. surgeon general released a report on the youth mental health crisis. It found that one in three teenage girls considered suicide in 2021. The data are unequivocal: big tech is a large part of the problem. AI will only amplify that manipulation and exploitation. The performance of AI rests on exploitative labor practices, both domestically and internationally. And massive, opaque AI models that are fed problematic data often exacerbate existing biases in society, affecting everything from criminal sentencing and policing to health care, lending, housing and hiring. In addition, the environmental impacts of running such power-hungry AI models strain already fragile ecosystems reeling from the effects of climate change.

AI also threatens to make potentially dangerous technologies more accessible to rogue actors. Last year researchers asked a generative AI model to design new chemical weapons. It produced 40,000 potential weapons in six hours. An earlier version of ChatGPT generated bomb-making instructions. And a class exercise at the Massachusetts Institute of Technology recently demonstrated how AI can help create synthetic pathogens, which could potentially ignite the next pandemic. By spreading access to such dangerous information, AI threatens to become the computerized equivalent of an assault weapon or a high-capacity magazine: a vehicle for a single rogue person to unleash devastating harm at a magnitude never seen before.

Yet companies and private actors are not the only ones racing to deploy untested AI. We should also be wary of governments pushing to militarize AI. We already have a nuclear launch system precariously perched on mutually assured destruction that gives world leaders just a few minutes to decide whether to launch nuclear weapons in the event of a perceived incoming attack. AI-powered automation of nuclear launch systems could soon remove the practice of having a “human in the loop,” a necessary safeguard to ensure that faulty computerized intelligence does not lead to nuclear war, which has come close to happening several times already. A military automation race, designed to give decision-makers greater ability to respond in an increasingly complex world, could lead to conflicts spiraling out of control. If nations rush to adopt militarized AI technology, we will all lose.

As in the 1940s, there is a critical window to shape the development of this emerging and potentially dangerous technology. Oppenheimer recognized that the U.S. should work with even its deepest antagonists to control the dangerous side of nuclear technology internationally while still pursuing its peaceful uses. Castigating both the man and the idea, the U.S. instead kicked off a vast cold war arms race by developing hydrogen bombs, along with related expensive and sometimes bizarre delivery systems and atmospheric testing. The resulting nuclear-industrial complex disproportionately harmed the most vulnerable. Uranium mining and atmospheric testing caused cancer among groups that included residents of New Mexico, Marshallese communities and members of the Navajo Nation. The wasteful spending, opportunity cost and impact on marginalized communities were incalculable, to say nothing of the numerous close calls and the proliferation of nuclear weapons that ensued. Today we need both international cooperation and domestic regulation to ensure that AI develops safely.

Congress must act now to regulate tech companies to ensure that they prioritize the collective public interest. Congress should start by passing my Children’s Online Privacy Protection Act, my Algorithmic Justice and Online Platform Transparency Act and my bill prohibiting the launch of nuclear weapons by AI. But that is just the beginning. Guided by the White House’s Blueprint for an AI Bill of Rights, Congress needs to pass broad regulations to stop this reckless race to build and deploy unsafe artificial intelligence. Decisions about how and where to use AI cannot be left to tech companies alone. They should be made by centering the communities most vulnerable to exploitation and harm from AI. And we should be open to working with allies and adversaries alike to avoid both military and civilian abuses of AI.

At the start of the nuclear age, rather than heed Oppenheimer’s warning about the dangers of an arms race, the U.S. fired the starting gun. Eight decades later, we have a moral obligation and a clear interest in not repeating that mistake.

This is an opinion and analysis article, and the views expressed by the author or authors are not necessarily those of Scientific American.