
Many robots track objects by “sight” as they work with them, but optical sensors cannot take in an item’s entire shape when it is in the dark or partly blocked from view. Now a new low-cost technique lets a robotic hand “feel” an unfamiliar object’s form and deftly handle it based on this information alone.

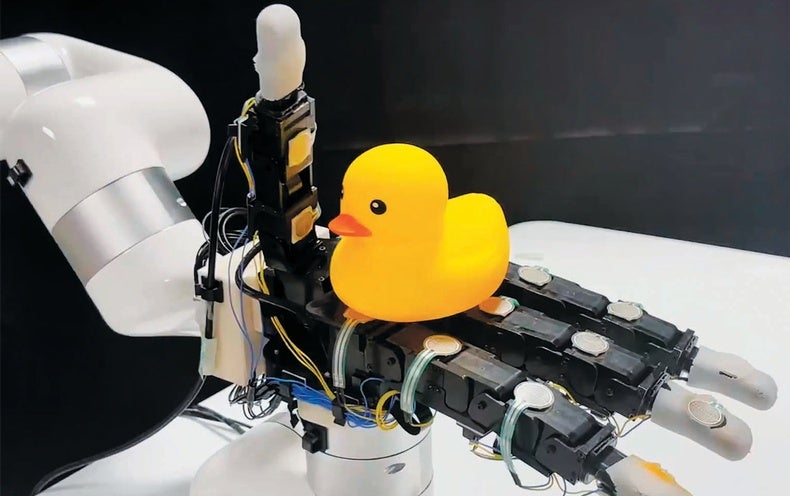

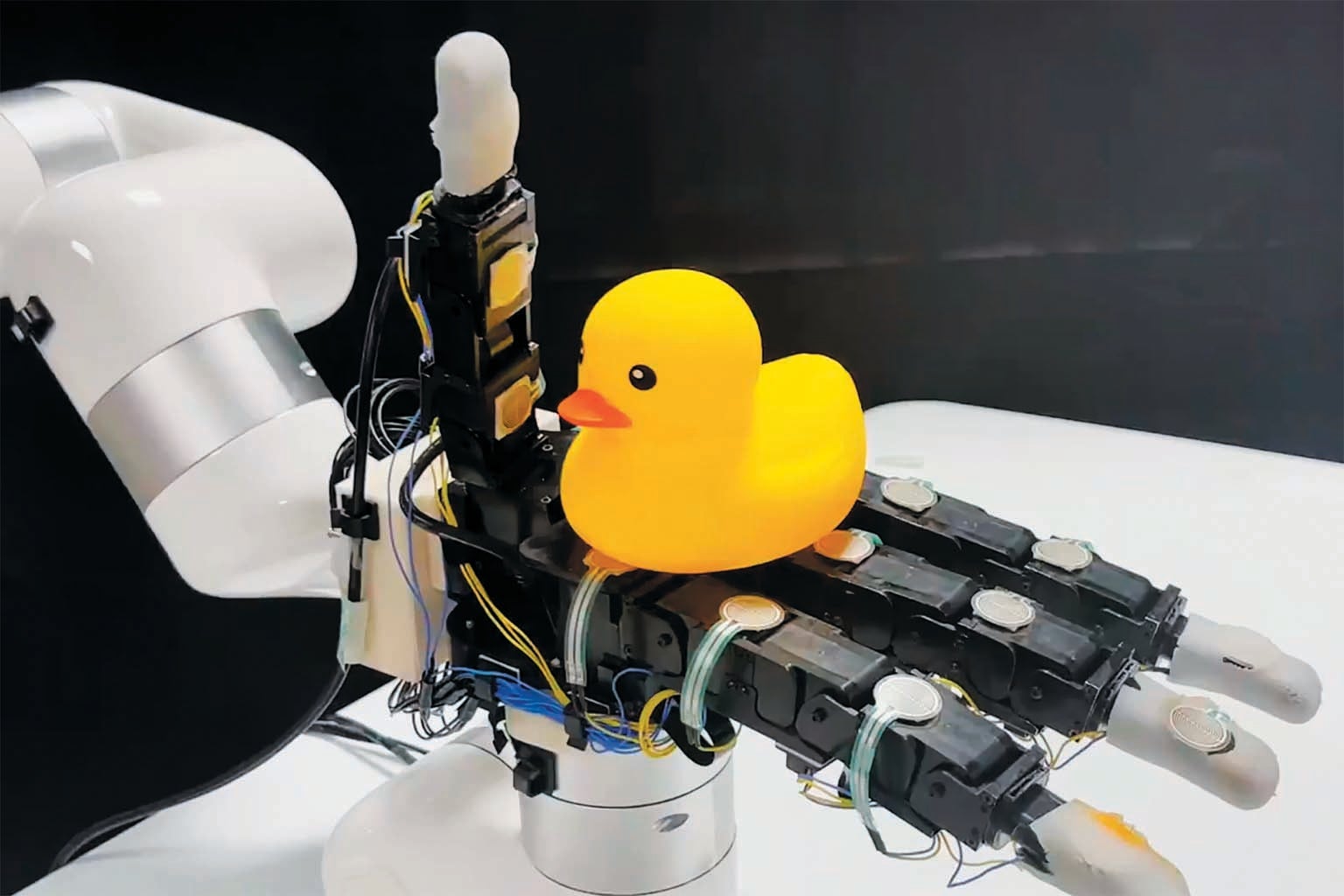

University of California, San Diego, roboticist Xiaolong Wang and his team wanted to find out whether complex coordination could be achieved in robotics using only simple touch data. The researchers attached 16 contact sensors, each costing about $12, to the palm and fingers of a four-fingered robot hand. These sensors indicate only whether an object is touching the hand or not. “While one sensor doesn’t catch much, a lot of them can help you capture different aspects of the object,” Wang says. In this case, the robot’s task was to rotate items placed in its palm.

The researchers first ran simulations to collect a large volume of touch data as a virtual robot hand practiced rotating objects, including balls, irregular cuboids and cylinders. Using binary contact data (“touch” or “no touch”) from each sensor, the team built a computer model that determines an object’s position at every step of the handling process and moves the fingers to rotate it smoothly and stably.
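To make the idea of binary contact data concrete, here is a minimal Python sketch of how 16 touch/no-touch readings might be packed into an input for a control model. It is an illustration only, not the researchers' code: the threshold, the three-frame history and the names `binarize` and `ContactHistory` are all assumptions introduced here.

```python
from collections import deque

NUM_SENSORS = 16  # from the article: 16 contact sensors on the palm and fingers
HISTORY = 3       # assumption: how many recent readings to keep (not from the article)


def binarize(raw_readings, threshold=0.5):
    """Reduce hypothetical analog readings to touch (1) or no touch (0)."""
    if len(raw_readings) != NUM_SENSORS:
        raise ValueError(f"expected {NUM_SENSORS} readings, got {len(raw_readings)}")
    return [1 if value >= threshold else 0 for value in raw_readings]


class ContactHistory:
    """Keeps the most recent contact frames and flattens them into one vector."""

    def __init__(self, length=HISTORY):
        # Start with all-zero frames, meaning nothing has touched the hand yet.
        self.frames = deque(
            ([0] * NUM_SENSORS for _ in range(length)), maxlen=length
        )

    def push(self, contacts):
        # Appending to a full deque silently drops the oldest frame.
        self.frames.append(list(contacts))

    def observation(self):
        # Oldest frame first, newest last: HISTORY * NUM_SENSORS bits in total.
        return [bit for frame in self.frames for bit in frame]


history = ContactHistory()
history.push(binarize([0.9] * 4 + [0.1] * 12))  # four sensors report contact
print(len(history.observation()))
```

Because every reading is reduced to a single bit, the same encoding could in principle be fed by a simulator or by physical sensors without changing the format the model sees.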

Next they transferred this capability to operate a real robot hand, which successfully manipulated previously unencountered objects such as apples, tomatoes, soup cans and rubber ducks. Transferring the computer model to the real world was relatively easy because the binary sensor data were so simple; the model did not rely on accurately simulated physics or exact measurements. “This is important since modeling high-resolution tactile sensors in simulation is still an open problem,” says New York University’s Lerrel Pinto, who studies robots’ interactions with the real world.

Digging into what the robot hand perceives, Wang and his colleagues found that it can re-create the entire object’s shape from touch data, informing its actions. “This shows that there’s sufficient information from touching that allows reconstructing the object shape,” Wang says. He and his team are set to present their handiwork in July at an international conference called Robotics: Science and Systems.

Pinto wonders whether the system would falter at more intricate tasks. “During our experiments with tactile sensors,” he says, “we found that tasks like unstacking cups and opening a bottle cap were significantly harder, and perhaps more useful, than rotating objects.”

Wang’s group aims to tackle more complex movements in future work as well as to add sensors in places such as the sides of the fingers. The researchers will also try adding vision to complement touch data for handling complicated shapes.
